My emerging heuristics for assessing AI Design

Best practices that make interacting with AI easy, valuable, ethical, purposeful, and delightful.

How do we define "good" AI Design?

This question has been swirling in my head every time I interact with a new AI-powered product or feature.

It has to be usable, both in its raw form and within the context of whatever product or wrapper you're accessing it from. Thirty years in, Jakob Nielsen's Ten Usability Heuristics remain as useful and relevant as ever.

It has to be familiar, fluid, and understandable, so we can "converse" with it or direct it without requiring significant code-switching. Erika Hall's Conversational Design guides us to consider how we can design programs that speak human fluently through universal principles.

It has to be ethical and trustworthy, so we can responsibly and securely use it in different contexts. I've been following Yaddy Arroyo's explorations into AI Ethics, which offer a blueprint for evaluating how ethics shape the experience along the user's entire journey.

Each of these frameworks covers an aspect of AI design, but there's a missing piece for me: Our relationship to AI has changed.

Until recently, our relationship with AI was one-directional.

AI could predict what information we were searching for, but users couldn't direct it back.

Now, that's changed.

Rapid advancements in Natural Language Processing have fundamentally reconfigured the landscape of AI products.

Users can now personalize the AI's instructions, provide contextual feedback in real time, and give references and specific guidelines that describe the outcome they are seeking.

Now, it's a two-way conversation.

But there's a catch. The more autonomy that users have to direct computers to their personal whim, the better they will expect computers to understand them and anticipate their needs.

Perception matters.

As users come to expect AI to adapt to their needs, their inflated expectations can result in a poor user experience if they aren't met.

But this isn't just about perception.

Users have more trust in systems that they can direct, even if the system makes a mistake.

The bi-directionality of working with computers as digital assistants and agents creates a new paradigm for HCI.

This will only continue. Gradually, computers are becoming more autonomous themselves, and more capable of sensing changes in our contexts, emotional states, unconscious needs, etc.

👉 How do we evaluate the UX of AI as it grows more adaptive and integrated into our everyday lives?

Taking a page from Adaptive Systems Theory

Adaptive Systems offer hints for how we can adjust our understanding of User Experience to this new paradigm.

Some of the ways that well-designed AI experiences mimic* these principles include:

Feedback loops: these experiences use mechanisms to receive input from users and adjust accordingly.

Flexibility and Personalization: they give users control to tailor or tune the experience to meet their needs.

Anticipation of needs: they use data to proactively anticipate user needs and improve, "learning" and modifying over time.

Resilience: they treat errors as inputs, and not fail states, since users can redirect the system to get a better outcome.

Context-awareness: they can react and adapt their behavior to different situations and constraints.

*(I say mimic because until we reach true AGI, AI-driven products will always require some sort of user interactivity to adjust and personalize their response systems.)

From a UX perspective, we can use these principles to paint a picture of AI interaction design. Like any two-way interaction, AI design relies on loops of information, powered by capabilities that ensure a program has sufficient input to adapt to the user's needs as the conversation progresses:

I need to have the control to craft my input,

I need to receive enough information back to improve my input, and

I need the output to be trustworthy and accurate so I am willing to re-engage.

Evaluating what "good" AI looks like

Blending all of this together, I've developed my own set of heuristics that I've been using to evaluate AI products, particularly products that include generative capabilities and natural language processing.

These are emerging best practices as I see them, not specific guidelines.

When these are in place, information flows intentionally. It feeds better inputs, better outputs, faster adaptability, and higher trust.

I go through each in detail below. Here's the topline list:

Heuristics for AI design

Purposeful and Needful: The AI solves for a real and significant need in a meaningful way, and makes sense within its surrounding context.

Input Ease and Effectiveness: It is intuitive and easy for users to build prompts that result in accurate, relevant, and high-quality results.

Result Quality and Context: Outputs are clear, accurate, and relevant, and supplemented with additional information to enhance user comprehension and provide helpful context.

Customization and Tunability: Users can fine-tune their inputs to easily generate outputs that match their specific needs and expectations.

Branching and Recall: The AI maintains conversational context throughout or across interactions, allowing users to explore different paths and easily return to the main thread.

User Autonomy and Control: Users maintain control over the AI through mechanisms that let them guide, direct, and control the interaction.

Logical Transparency: The AI clearly communicates its decision-making processes, improving user comprehension and trust.

Continuous Learning: The AI continuously improves, learning from user feedback and data to enhance its functionality.

Ethical Integrity and Trustworthiness: The AI adheres to ethical standards, minimizes bias, protects privacy, and ensures transparency to foster user trust.

Identification and Honesty: Users can distinguish AI inputs and outputs from human-generated content. The AI is honest and transparent about its capabilities and constraints.

#1 Purposeful and Needful

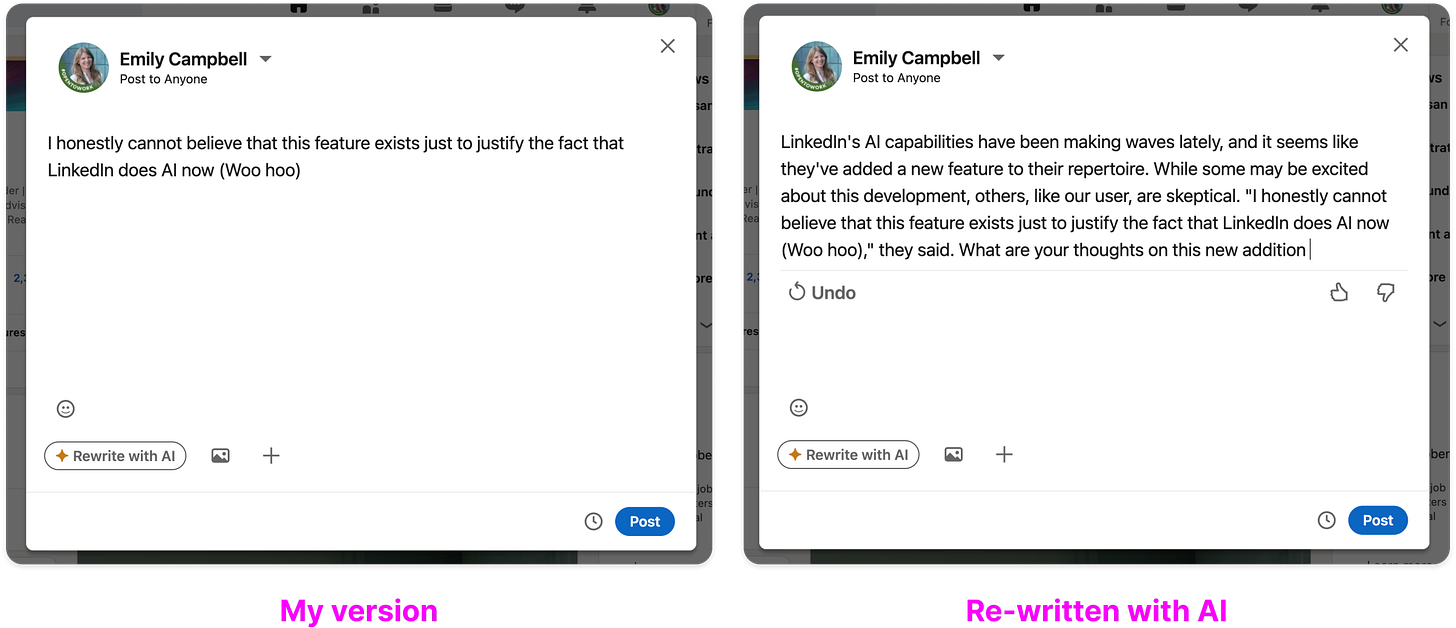

The AI solves for a real and significant need in a meaningful way, and makes sense within its surrounding context.

We are in the "put a bird on it" moment in the AI hype cycle. It's a race to the starting line. For every product implementing a useful feature that makes work or life easier through AI, there are 3 more examples of meaningless clutter pushed on us to show that the company can DO AI.

The list of problems that AI can be applied to will continue to grow as the technology evolves. The importance of introducing this technology only when it's purposeful won't change.

Sample Assessment Criteria:

Could this task be successfully completed just as fast without AI?

Are the outcomes significantly better?

Does this exist to solve a human need or a business need first?

Are the incentives behind the implementation of this AI clearly centered on user needs?

#2 Input Ease and Effectiveness

The AI allows users to easily and intuitively craft a prompt that is likely to give them a useful response.

The giant input box that asks "what do you want to build today?" has become a gimmick in AI platforms. After a few interactions, users quickly realize they will need longer and more specific prompts to return an output that meets their needs. Or, they disengage.

There's a gap between the simplicity of this common UI pattern and the complexity that an effective prompt requires. (See Microsoft's training video on advanced prompting as an example.)

Great experiences carry the load for the user by mapping the input type to the context (not everything needs to be open text), allowing references or examples to reduce how much information needs to be manually entered, and using suggestions, templates, and other wayfinding devices to help the user craft an input that works.

Great AI products that don't center on the generative input make it easy to "call" AI (GitHub Copilot or Figma's FigJam assistant), craft inline prompts or inputs (Klu.ai's playground or Notion's AI-prompt column type), and re-generate the request (Midjourney's image regeneration button).

Sample Assessment Criteria:

How many attempts would a user need to take to get an output that feels right?

What guidance does the system offer to help the user?

How does this vary depending on where the user is in their customer lifecycle, their capabilities, or their preferences?

#3 Result Quality and Context

Outputs are clear, accurate, and relevant, and supplemented with additional information to enhance user comprehension and provide helpful context.

If a user can't assess whether the AI provided an accurate and reliable result, it's not a useful or usable system.

Quality results are partially a result of great inputs, which is why Input Ease and Effectiveness matters. However, the choice of model, the programming of the model, the underlying prompts, and so on also shape the outcome. The difference is that a user can't see or control these.

A user also can't control the data that a response is based on, which is why showing that data, or letting a user trace back to it through other means, is critical to helping them assess the accuracy and usefulness of the output.

Sample Assessment Criteria:

How often does the system exclude information that should be included? How often does it hallucinate or give false information?

Do the results remain accurate throughout the conversation or do they degrade?

Are sources and other footprints provided to help a user identify the source of information?

#4 Customization and Tunability

Users can fine-tune their inputs to easily generate outputs that match their specific needs and expectations.

AI that personalizes its results to the user is central to adaptive UX. Particularly in generative settings, users will expect that the AI produce content that matches their voice and tone, technical level, and overall brand.

Over time, AI will learn to adapt to these personalized user preferences and characteristics automatically. For now, good AiUX allows users to explicitly set their own parameters and tweak their settings to ensure the AI's output meets their needs.

These personalized nudges can be set at the conversational or thread level, or can target a specific prompt:

At the overall conversational level, a user may choose to constrain results to a specific modality, to capture a specific tone of voice in a generative output, or define which model to interact with.

At the prompt level, a user may direct the AI to set a specific length for its response, capture certain tokens, or weight one token or attribute over another.
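The two levels described above can be pictured as layered settings: thread-level choices act as defaults, and a single prompt can override them without changing the rest of the conversation. The following is only a minimal sketch of that idea. The `OutputSettings` class, its fields, and `resolve_settings` are hypothetical names made up for illustration, not any product's actual API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class OutputSettings:
    """Hypothetical tuning knobs; the field names are illustrative only."""
    tone: str = "neutral"
    modality: str = "text"
    max_words: Optional[int] = None


def resolve_settings(thread: OutputSettings, **prompt_overrides) -> OutputSettings:
    """Start from the thread-level settings and apply prompt-level overrides."""
    return replace(thread, **prompt_overrides)


# Set once for the whole conversation.
thread_settings = OutputSettings(tone="friendly")

# Tweaked for a single prompt only.
one_off = resolve_settings(thread_settings, max_words=50)

print(one_off)          # tone stays "friendly", max_words becomes 50
print(thread_settings)  # the thread-level settings are unchanged
```

Keeping the thread-level object immutable is one way to make sure a one-off nudge never silently changes the defaults the user set for the whole conversation.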

Sample Assessment Criteria:

Can the AI consistently reproduce outputs that follow specific characteristics, and then maintain those characteristics throughout a conversation?

Does the AI system provide clear guidance on how to adjust settings for optimal results?

If a user intervenes to direct the AI on a specific way to tune its output, how responsive is the AI to that direction?

#5 Branching and Recall

The AI maintains conversational context throughout or across interactions, allowing users to explore different paths and easily return to the main thread.

Natural conversation is not linear, and we should not expect that a user's interaction with AI will be either. AI can support the affordances of dialogue by making it easy for a user to follow a thread and then return to the main branch of a conversation.

This is not limited to AI conversations. Giving users the ability to reference other threads leads to a more fluid and human interaction. For example, Midjourney allows users to reference previous results when crafting new ones to inform the AI of their intent. ChatGPT's introduction of GPTs that can be mentioned in other conversations allows the user to direct the AI without leaving the context of their main thread.
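One way to think about branching and recall is to treat a conversation as a tree rather than a list: a tangent becomes a side branch, and returning to the main thread means replying from an earlier turn. This is a rough sketch under that assumption. The `Turn` class and its methods are hypothetical and do not describe how any of the products above work internally.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Turn:
    """One message in a conversation tree (hypothetical structure)."""
    text: str
    parent: Optional["Turn"] = field(default=None, repr=False)
    children: List["Turn"] = field(default_factory=list, repr=False)

    def reply(self, text: str) -> "Turn":
        """Add a new turn that branches from this one."""
        child = Turn(text, parent=self)
        self.children.append(child)
        return child

    def context(self) -> List[str]:
        """Every turn from the root down to this one, oldest first."""
        path, node = [], self
        while node is not None:
            path.append(node.text)
            node = node.parent
        return path[::-1]


root = Turn("Plan a weekend trip to Lisbon")
main = root.reply("Here is a two-day itinerary")
tangent = main.reply("What is the weather like in March?")
back_on_track = main.reply("Swap day two for a trip to Sintra")

print(tangent.context())        # root, itinerary, weather question
print(back_on_track.context())  # root, itinerary, Sintra request (no tangent)
```

Because each branch carries only its own ancestry, the tangent about the weather does not leak into the context of the main thread, yet it remains available to revisit later.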

Sample Assessment Criteria:

Does the AI effectively maintain context over interactions?

How easily can users navigate through complicated conversation paths and find their way back to previous comments?

How easy is it to reference or find older thoughts or comments?

Is the AI constrained to a single thread or interaction or can it reference information in other threads to remix with?

#6 User Autonomy and Control

Users maintain control over the AI through mechanisms that let them guide, direct, and control the interaction.

Good AI is human centered: solving real human needs in a personalized way. As the technology advances, it is critical that users retain the ability to explicitly direct the AI.

At the surface level, this could be as simple as "stop and go" controls that allow users to abort generation of a result if it's going off the rails. Implicitly, users will come to expect advanced Natural Language Processing in their conversational interfaces, giving them the ability to guide the AI to a specific output without having to learn to speak the computer's language.
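As a rough illustration of a "stop" control, the sketch below streams output one token at a time and checks a stop flag before emitting each one. It assumes the output arrives as a stream; the `generate` function and the fake token list are stand-ins invented for this example, not a real model API.

```python
import threading
from typing import Iterable, Iterator


def generate(tokens: Iterable[str], stop: threading.Event) -> Iterator[str]:
    """Yield tokens one at a time, checking the stop flag before each one."""
    for token in tokens:
        if stop.is_set():
            return
        yield token


stop_requested = threading.Event()

# Stand-in for a real model's streamed output.
fake_model_output = ["Once", "upon", "a", "time", "there", "was"]

shown = []
for token in generate(fake_model_output, stop_requested):
    shown.append(token)
    if len(shown) == 3:
        # Simulate the user pressing "stop" after three tokens.
        stop_requested.set()

print(shown)  # ['Once', 'upon', 'a']
```

In a real interface the flag would be set by a button press rather than a counter, but the principle is the same: the user's signal is checked often enough that generation halts almost immediately.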

Sample Assessment Criteria:

Can the user stop or restart a Generative AI result if it's not meeting the user's need mid-prompt?

Can the user direct the AI through a list of instructions (visit this link, review my format) before beginning a response? How frequently does the AI ignore these instructions?

Does the AI act autonomously or follow the user's lead? Does the AI get lazy?

Can the user exclude specific tokens, sources, or other inputs from the final product?

#7 Logical Transparency

The AI clearly communicates its decision-making processes, improving user comprehension and trust.

Transparency is critical to building user trust. An AI that can articulate "how" and "why" it generated some result empowers a user, making the technology more approachable, reliable, and configurable.

The clearest issue with transparency relates to the opaque training data of popular Large Language Models (see the NYTimes lawsuit against OpenAI, or the inability of the OpenAI CTO to specify the training data behind their new video generation model).

This extends beyond elucidating the logic behind a specific result. Other considerations include:

Training data and ethics

Efforts to combat bias in the model

Limitations to the model's results

Advice for how to improve the results

Within smaller and proprietary models, this is more an issue of being able to work backwards from a result to improve the model itself. Understanding how AI came up with a result gives us critical inputs to improve the results.

Sample Assessment Criteria:

Can the AI articulate how it arrived at a specific answer?

If it gets information wrong, can it explain why or does it only correct its result?

Can the AI give the user instructions for how to get better results?

#8 Continuous Learning

The AI continuously improves, learning from user feedback and data to enhance its functionality.

Adaptive systems can learn and adjust to meet an infinite number of contextual clues and user needs. Without the ability to learn from each of these adjustments, AI will rely on user input to improve its results.

Direct input from users takes work and is prone to error. The faster and more reliably AI can learn, the more personalized and human-centered each subsequent interaction can become, and the faster AI can deliver more agentive capabilities.

In addition to learning the preferences of its users, AI can support more scalable systems that improve over time by learning more efficient or effective ways to produce the results users want.

Sample Assessment Criteria:

Does the AI reflect a growing understanding of the user over time?

Can the AI recall the user's adjustments and improve its results?

Do the AI's learnings persist beyond single interactions?

Does the AI anticipate emerging user needs and preferences before the user is aware of them?

Does the AI begin to proactively anticipate and avoid error states?

#9 Ethical Integrity and Trustworthiness

The AI adheres to ethical standards, minimizes bias, protects privacy, and ensures transparency to foster user trust.

As interfaces change from being screen-based to conversational, our understanding of minimalist design and aesthetics must evolve. Ethics have traditionally been considered an aesthetic aspect of design, as the reflection of how something looks and feels should reveal the functional incentives, biases, and constraints of its builder.

Ethical integrity goes beyond the model and implementation itself. Other considerations for the ethics of the technology include:

how the model acquires new data to train on,

clarity in its documentation,

multi-modality of its outputs to support users with different physical or cognitive abilities

and more.

Trustworthiness is both a reflection of the transparency of the model, and of the reliability of its results. Proactive error avoidance, continuous learning of user preferences, and logical guidance to improve inputs and outputs feed into user trust.

Sample Assessment Criteria:

Is the product transparent about the underlying model it uses and the model's limitations?

Can users control how their data is used and accessed?

Is the model's owner transparent about any underlying biases and the actions they have taken to adjust for them in results?

Does the AI support multi-modal inputs and outputs to ensure it is accessible to users with different capabilities and circumstances?

#10 Identification and Honesty

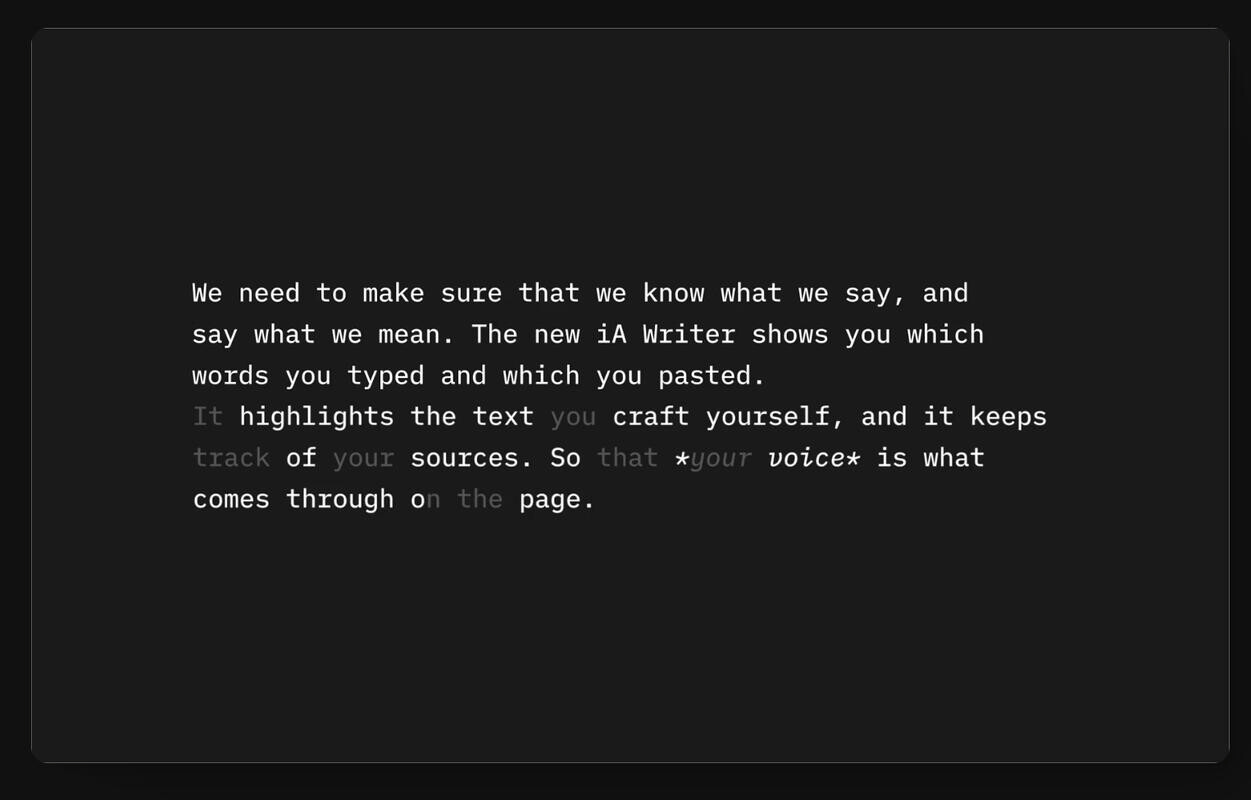

Users can distinguish AI inputs and outputs from human-generated content. The AI is honest and transparent about its capabilities and constraints.

Imagine a conversation where someone wasn't who they said they were, or was intentionally concealing their knowledge about the topic of discussion.

For AI experiences to be human-centered, users must know at all times when they are interacting with an AI or with AI-generated content, and what the limitations of the AI are.

Furthermore, AI should be honest about what instructions it is following and when it is acting autonomously. Individual users may choose to allow AI to interact with them in a less bounded way. In this case, defaults matter. Granting more freedom to the AI should be a preference that someone can opt into so no one is caught off guard, unable to find the boundaries between person and machine.
